Cochlea to categories: condensed version

Introduction: Our auditory system transforms sounds from a mixture of frequencies (e.g. high and low tones)

into a meaningful concept (e.g. a barking dog) (Figure 1). But when does this transition occur

and which brain regions are involved? In this work we address when and where acoustically-dominated

representations change to semantically-dominated representations in the human brain. Our multimodal

imaging approach reveals distributed and hierarchical aspects of this process.

Methods: We present 80 different natural sounds across 4 semantic categories

(voices, animals, objects, scenes) to 16 participants as we collect

temporally-resolved MEG and spatially-resolved fMRI brain responses.

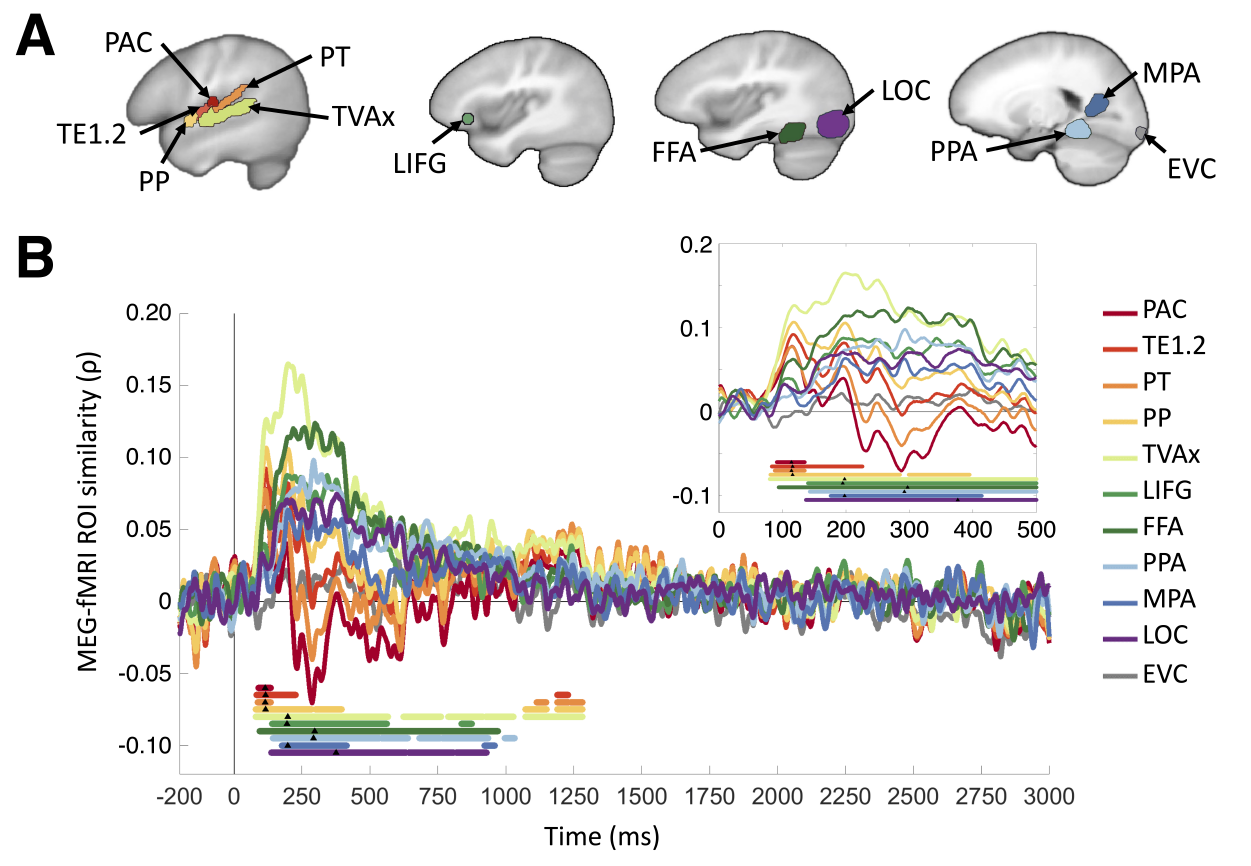

Using the MEG-fMRI Fusion method, we achieve high spatiotemporal resolution

to determine when and where the sounds are represented throughout the brain

(Figure 2B, also see Supplementary Movie 1). We compare our experimentally-collected representations against

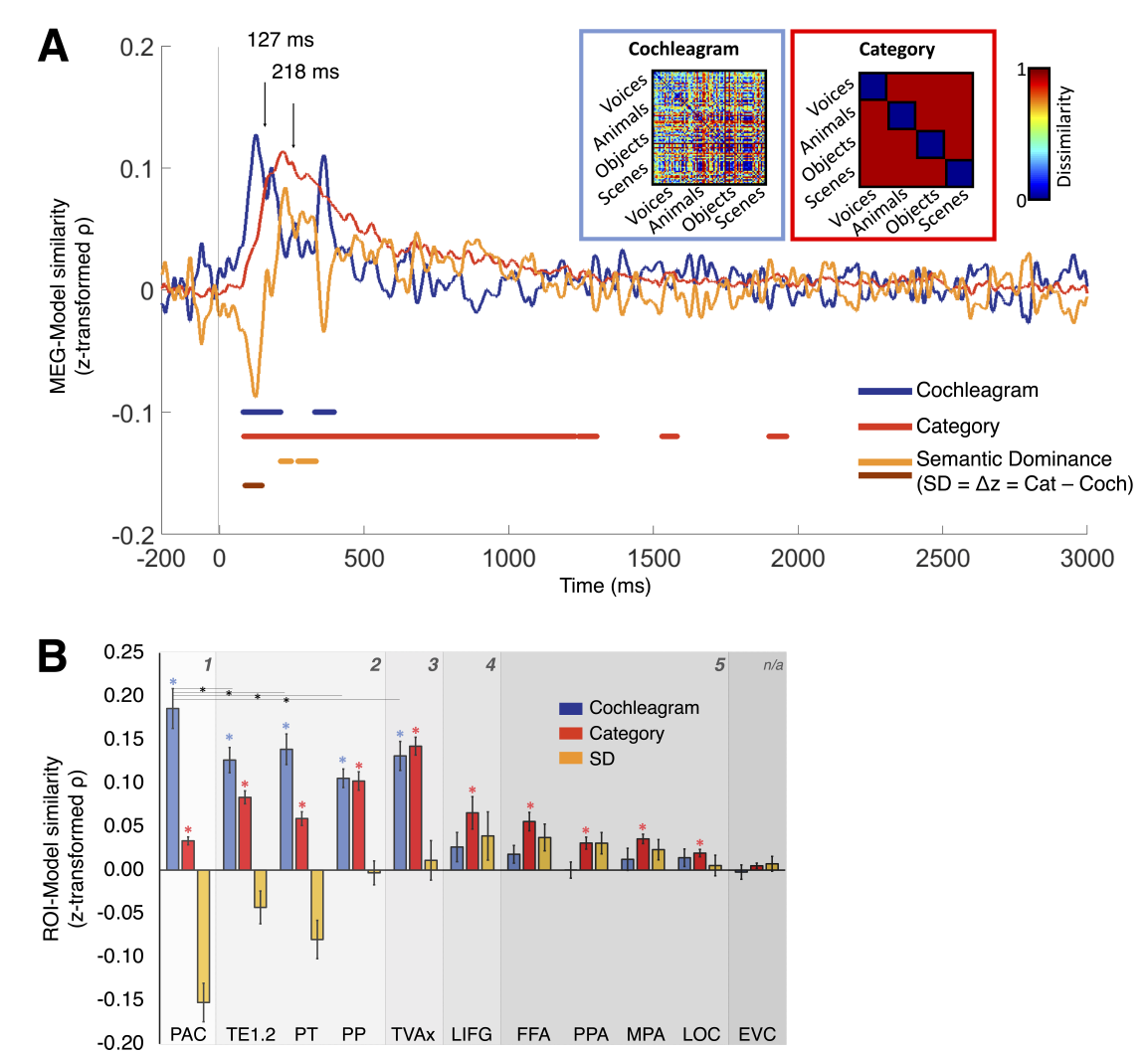

idealized models of a purely acoustic representation (based on cochleagram

differences) and a purely semantic representation (based on semantic

category membership) to measure which regions show a preference for acoustic

features or semantic features (Figure 3A).
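The two comparisons described above can be illustrated with a minimal sketch. It assumes the common representational-similarity formulation of MEG-fMRI fusion, in which each measurement is summarized as a representational dissimilarity matrix (RDM) over stimulus pairs and RDMs are compared with Spearman correlation; the summary above does not spell out these details, so treat them as assumptions. All function names (`category_model_rdm`, `fusion_time_course`, and so on) are hypothetical, and the "measured" RDMs are random toy data, not study data. The cochleagram-based acoustic model is not sketched because it requires the audio itself.

```python
from itertools import combinations
import random


def ranks(values):
    """Average ranks (tied values share their mean rank), 1-based."""
    order = sorted(range(len(values)), key=lambda k: values[k])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the current run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            out[order[k]] = mean_rank
        i = j + 1
    return out


def pearson(x, y):
    """Pearson correlation of two equal-length, non-constant sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def category_model_rdm(labels):
    """Upper triangle of an idealized semantic RDM:
    0 for a same-category pair, 1 for a different-category pair."""
    return [0.0 if labels[i] == labels[j] else 1.0
            for i, j in combinations(range(len(labels)), 2)]


def fusion_time_course(meg_rdms, fmri_rdm):
    """Correlate the MEG RDM at each time point with one region's fMRI RDM."""
    return [spearman(rdm_t, fmri_rdm) for rdm_t in meg_rdms]


# Toy setup mirroring the design: 80 sounds, 4 categories of 20 (assumed equal).
random.seed(0)
labels = [c for c in ("voices", "animals", "objects", "scenes")
          for _ in range(20)]
semantic_model = category_model_rdm(labels)  # 80 * 79 / 2 = 3160 pairs

# Hypothetical region RDM: the semantic model plus Gaussian noise.
roi_rdm = [d + random.gauss(0, 0.5) for d in semantic_model]

# Hypothetical MEG RDMs at three time points that increasingly
# resemble the region's RDM (weights 0.0, 0.5, 1.0).
meg_rdms = [[w * d + random.gauss(0, 0.5) for d in roi_rdm]
            for w in (0.0, 0.5, 1.0)]

semantic_fit = spearman(roi_rdm, semantic_model)  # model preference score
fusion = fusion_time_course(meg_rdms, roi_rdm)    # one value per time point
```

In this toy example, `fusion` rises across the three time points because the simulated MEG RDMs were built to resemble the region's RDM more and more; with real data, the time point of the maximum would give that region's peak latency.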

Results: We find that peak latencies in primary and non-primary

auditory regions (PAC, TE1.2, PT, and PP) were temporally indistinguishable from one another

at ~115 ms; those in voice-selective regions (TVAx and LIFG) and MPA were likewise indistinguishable but significantly later,

at ~200 ms; and those in high-level visual regions (FFA, LOC, PPA) were indistinguishable and later still,

at ~300 ms (Figure 2AB). The sounds' acoustic features are most strongly

coded in primary auditory regions early in time, and the sounds' semantic

category features are most strongly coded in secondary auditory regions and

even high-level visual regions later in time (Figure 3AB). High-level auditory

and visual regions additionally show a preference for certain sound categories.

Conclusion: Our findings show that across the auditory cortex (and especially in extra-auditory

regions), the temporal progression of brain responses strongly corresponds to a region's

spatial distance from PAC and increasingly acoustic-to-semantic representations. At a

finer level within parts of the auditory cortex (especially the primary and non-primary

auditory ROIs), numerous regions simultaneously respond to bring about the

acoustic-to-semantic transformation. Thus, the human auditory cortex resembles

both a hierarchical processing stream and a distributed processing stream dissociable

by space, time, and content.

Acknowledgements

The study was conducted at the Athinoula A. Martinos Imaging Center, MIBR, MIT.

We thank Dimitrios Pantazis for helpful discussion, and Michele Winter for

help with stimulus selection and processing.

This work was funded by an Office of Naval Research Vannevar

Bush Faculty Fellowship to A.O. [grant number N00014-16-1-3116].

S.T. was supported by an NIH training grant to Smith-Kettlewell Institute [grant number T32EY025201] and by the

National Institute on Disability, Independent Living, and Rehabilitation Research [grant number 90RE5024-01-00].

No potential conflict of interest was reported by the author(s).

Data Availability

We are actively working on releasing the MEG and fMRI data, which will be made available here soon. Thank you for your patience.